DOGE and the Perils of an AI-Driven Workforce

"An expert is someone who has a good explanation for why they were wrong"

The tariffs are, of course, the big story. I am not here to discuss them. I am here to discuss the process by which we arrived here and how it fits into the larger AI discussion. To catch everyone up (and boy, do I envy people who manage to maintain ignorance these days), yesterday President Trump unveiled a system of tariffs that is sending economic shockwaves around the world and can at best be described as “What would happen if you ran the country like a 5-year-old who was mad that they were losing at Monopoly.” There is a lot of discussion on why, precisely, this move is so economically illiterate, but the thing that prompted my writing was seeing this:

It seems increasingly obvious that Trump/Musk has brought in a group of inexperienced prompt engineers using AI to “help fix the country.” Lest you think this is just a one-off or coincidence:

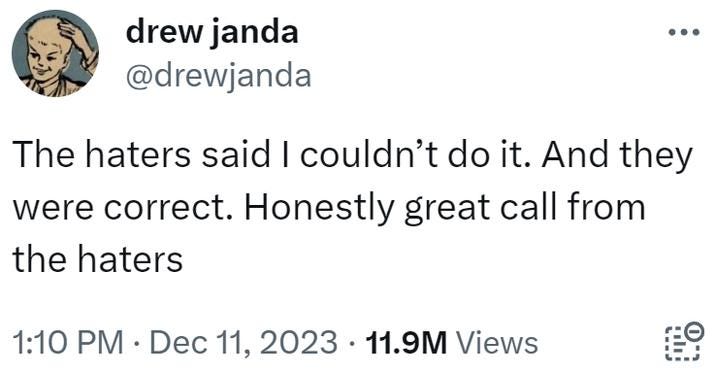

AI is a hot topic of discussion these days. There are two camps that I will call “maximalists” and “haters”. The haters believe that AI is an ouroboros of slop that just creates more slop, degrading itself with each iteration, and is a scam foisted on us by the Silicon Valley venture capital cretins. The maximalists, like Sam Altman and Marc Andreessen, believe AI is the key to a post-scarcity world where AI makes up the entire workforce, freeing humans to party in Ibiza. There is also a quiet third camp, which I (and, from my informal polling, most people who work in AI) belong to, somewhere in the middle of these views and leaning toward the maximalist side. However, there is one point where I am planting my flag: no matter how good AI gets, we will always need skilled humans to vet its work. What this means for the larger workforce is murky, but no matter what, we will need some skilled people to ensure things are being done properly. Here’s a simple example of why:

Let’s say you are setting up an economic policy and need to math out some parameters to make sure it will hit a threshold that ensures it is beneficial. So you ask the AI, “What is 6 times 5 plus 10 divided by 2 minus 4?” Those of us with a high school math education will remember the “Please Excuse My Dear Aunt Sally” mnemonic for order of operations and will evaluate 6 * 5 + 10 / 2 - 4 as 6 * 5 (30) plus 10 / 2 (5) minus 4, for an answer of 31. Indeed, this is what the AI will give you.

But what if you don’t have a high school math education, don’t understand what order of operations means, and really meant “What is 6 * (5 + 10) / 2 - 4?” In that case the answer would be 41. If you really meant “What is 6 * ((5 + 10) / 2 - 4)?” you would end up with 21. Unless you understand the question you are asking at a fundamental level, you have no way of knowing whether the answer you got is correct, or even whether you are formulating the question properly.
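To make the ambiguity concrete, here is a minimal Python sketch that evaluates the three readings of the same spoken question side by side. Python applies the standard precedence rules (multiplication and division before addition and subtraction, equal-precedence operators left to right), and `/` is true division, so each result is a float. The `readings` dictionary is just an illustrative name, not anything from the original discussion.

```python
# Three readings of "6 times 5 plus 10 divided by 2 minus 4".
# Python follows standard precedence: * and / bind tighter than + and -,
# and operators of equal precedence are evaluated left to right.
readings = {
    "6 * 5 + 10 / 2 - 4": 6 * 5 + 10 / 2 - 4,          # (6*5) + (10/2) - 4
    "6 * (5 + 10) / 2 - 4": 6 * (5 + 10) / 2 - 4,      # ((6*15)/2) - 4
    "6 * ((5 + 10) / 2 - 4)": 6 * ((5 + 10) / 2 - 4),  # 6 * (7.5 - 4)
}

for expression, value in readings.items():
    # '/' is true division in Python 3, so every value is a float;
    # the ':g' format drops the trailing ".0" for whole numbers.
    print(f"{expression} = {value:g}")
```

This prints 31, 41, and 21 respectively. Same digits, same operators, three different answers: the only thing that changes is where the parentheses go, which is exactly the part of the question a non-expert may not know to specify.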

The thing about AI in its current (and likely future) state is that no matter what answer it gives, it gives it confidently, in a manner that obscures any doubt it might have about the answer. Only if you are skilled in the domain and can call it out on its mistakes will it admit it was wrong, with a cloying cheeriness that would make Douglas Adams wince. Recently I have been working in an unfamiliar game engine and have been leaning on AI to complete my tasks. It has been wrong a lot. Here’s a smattering of times I have had to correct it to get a better answer:

When it told me to use a Node2D object in my ScrollContainer

When it told me to use a Viewport object to render my buttons

“But you can’t create a Viewport node in the scene tree,” I replied. “It’s abstract!”

After much back and forth I was able to coax a correct answer out of the AI and get my scrollable pane working. But I am a gameplay programmer with almost 20 years of experience. I am as close as you can get to an “expert” on the subject. If I were in my 20s, fresh out of college? I would have had no chance of landing on the correct solution. All the AI in the world won’t help if you don’t have a solid understanding of the ground you are walking on.

Here’s the dirty secret about being an expert: you are still often wrong. Sometimes you are wrong because of bad input: garbage in, garbage out. Sometimes you are wrong because of faulty assumptions. Sometimes you are wrong because it’s early in the morning and you haven’t had your coffee. But, and this is the key, being an expert means you are capable of identifying mistakes, understanding the underlying error, and correcting it. This is an important part of everyone’s work! When you have only AI and prompt engineers, there is nobody who can look at an answer and see whether it passes the smell test.

This brings us back to DOGE. What we are seeing here is a case study in AI maximalism with no expert oversight: a group of unskilled techies who think they can code their way through every problem, who treat experts as wet-blanket nuisances to be bypassed, and who, as a result, are stepping on every rake imaginable and appear on track to annihilate our economy as well as the United States’ century of global dominance. They are people who mistake technical skill with computers for a generalized ability to solve every problem.

All of this is to say that, while it is still early, it appears there is no hope for the maximalist dream of a society with an entirely AI driven workforce. We will always need an educated human workforce to ensure things are running properly. Score one for the haters.

I agree with Tulley that we need experts in the room now and will continue to need them. I also believe it is valuable to learn how to use AIs to understand both sides of public policy debates and to challenge our own assumptions.

Inspired by this thoughtful piece, I chatted with my new favorite AI, ChatGPT o3-mini, for a little while. I concluded from this conversation that our current leadership would have benefited from even more conversation with AIs. Specifically, AIs could have explained:

* How tariffs contribute to inflation,

* Their recommendation of using a balanced approach including "investments in R&D, workforce development, modernized infrastructure, smart trade agreement negotiations, targeted tariffs, and regulatory reforms" to reduce US trade deficits with other countries, and

* The importance of continually challenging one's assumptions for both fundamental science as well as for economic policy.

Below is a somewhat longer summary of my conversation with ChatGPT.

Tariffs and Trade: We discussed how tariffs can raise costs and contribute to inflation by making imported goods more expensive, potentially leading to cost-push effects and supply chain disruptions.

Trade Deficit Reduction Strategies: A range of policy options was explored, from boosting domestic competitiveness and investing in innovation and infrastructure to revising trade agreements and adjusting fiscal policies, to help reduce U.S. trade deficits.

Policy Recommendations for Unified Control: If unified control of the White House, House, and Senate were achieved, a balanced approach would include investments in R&D, workforce development, modernized infrastructure, smart trade agreement negotiations, targeted tariffs, and regulatory reforms.

The Scientific Process and Challenging Assumptions: We reviewed how the scientific method involves testing, falsification, and the continual reexamination of assumptions, which is crucial for improving theories and models.

Application to Economics: The importance of challenging assumptions was extended to economics, noting that empirical testing, data analysis, and adaptive policymaking help refine economic models and improve economic policy in the face of real-world complexities and uncertainties.

For the full conversation, please go to https://docs.google.com/document/d/1CfmNku6EJIGpSXq5YldcW078jbLjKD9yZpHE6eGQpZs/edit?usp=sharing